“It’s all about discriminating between the sources of climate variation. As long as these sources are not at least calibrated to order of magnitude, attribution will continue to be hopeless.”

– by Paul Pukite Jan 2024 on RC

About the recent heat records, ENSO, shipping exhausts, other possible drivers, unknown short-term future shifts, climate sensitivity (ECS), the CMIP models, the 2019 “hot models”, IPCC projections (forecasts and models), the UK Met Office “hot” model, Hansen et al. 2023 and its critics, why an ECS above 5 °C can’t easily be dismissed, and what the next 20 years might bring.

– I can tell you that my most disliked videos, by far, are those on climate change. It doesn’t matter if it’s good news or bad news; some people, it seems, reflexively dislike anything about the topic.

– Now, it’s possible that 2023 was something of an outlier and that average temperatures will decrease somewhat in the next few years. There are several reasons for this.

– So, next year might break more records because it’ll still be El Niño, but in 2 or 3 years we might see a slight cooling.

– Be that as it may, I worry that even if 2024 is not a new record breaker, the overall trend in the next years will be steeply up and the situation is going to deteriorate rapidly. The reason has to do with a quantity called the equilibrium climate sensitivity (ECS).

– ECS is the warming you eventually get, once the climate has settled, when the carbon dioxide concentration doubles. It’s a useful quantity to gauge how strongly a model will react to changes in carbon dioxide, and it’s the key quantity that determines the predictions for how fast temperatures are going to rise if we continue increasing carbon dioxide levels.
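To make the role of ECS concrete: the forcing from carbon dioxide grows roughly with the logarithm of its concentration, so a common back-of-the-envelope estimate of eventual warming is ΔT ≈ ECS × log2(C/C0). The sketch below assumes that textbook approximation and a pre-industrial baseline of 280 ppm, and it ignores other gases, aerosols and the decades it takes to reach equilibrium. The helper name `equilibrium_warming` is hypothetical, not taken from any climate model.

```python
import math

# Assumed pre-industrial CO2 baseline in ppm (illustrative choice).
PREINDUSTRIAL_PPM = 280.0


def equilibrium_warming(ecs_c: float, co2_ppm: float,
                        baseline_ppm: float = PREINDUSTRIAL_PPM) -> float:
    """Hypothetical helper: eventual warming in degrees C for a CO2 level.

    Uses the approximation that forcing is logarithmic in CO2, so each
    doubling of CO2 adds `ecs_c` degrees at equilibrium. Ignores other
    greenhouse gases, aerosols and the lag caused by ocean heat uptake.
    """
    if co2_ppm <= 0 or baseline_ppm <= 0:
        raise ValueError("concentrations must be positive")
    return ecs_c * math.log2(co2_ppm / baseline_ppm)


if __name__ == "__main__":
    # Compare the older CMIP range (2 to 4.5 C) with a "hot model" value of 5 C.
    for ecs in (2.0, 3.0, 4.5, 5.0):
        print(f"ECS {ecs:.1f} C -> {equilibrium_warming(ecs, 420.0):.2f} C at 420 ppm, "
              f"{equilibrium_warming(ecs, 560.0):.2f} C at 560 ppm")
```

At 560 ppm, a doubling of the assumed baseline, the result equals the ECS by construction; at any other concentration the eventual warming simply scales in proportion to the ECS.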

Up to 2019 or so, the climate sensitivity of the world’s most sophisticated climate models was roughly between 2 and 4.5 degrees Celsius. These big climate models are collected in a set called the Coupled Model Intercomparison Project, CMIP for short. That’s about 50 to 60 models, and it’s what the IPCC reports are based on.

So, until a few years ago, we had a climate sensitivity of 2 to 4.5 degrees or so, and that’s what we came to work with. That’s what all our plans rely on, if you can even call them plans.

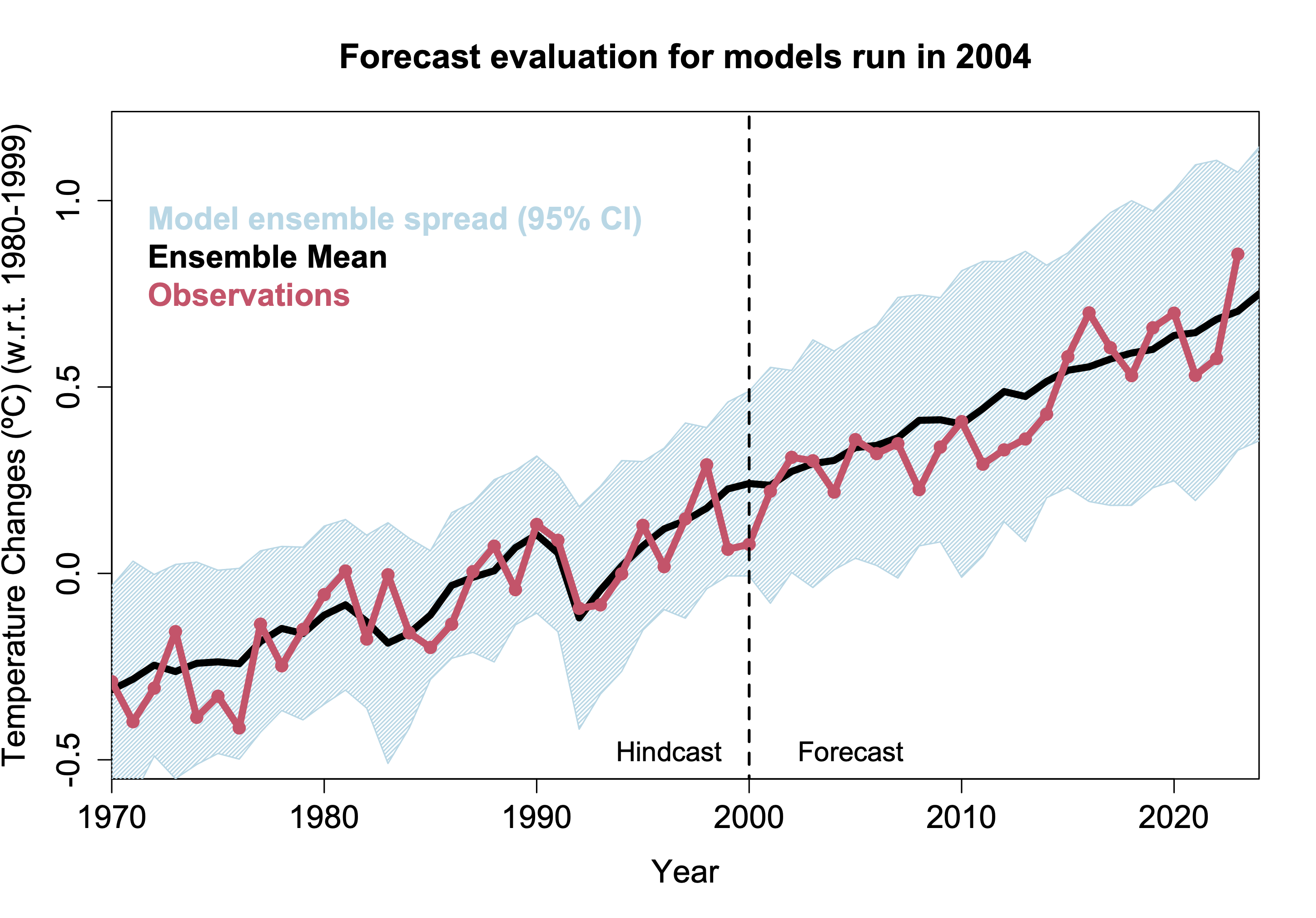

You have probably all seen this range in the IPCC projections for the temperature increase in different emission scenarios. It’s the shaded region around the mean value. Loosely speaking, the lower edge corresponds to the lowest climate sensitivity and the upper edge to the highest.

Then this happened. In the 2019 model assessment, 10 out of 55 models had a climate sensitivity higher than 5 degrees Celsius. That was well outside the range previously considered likely. If this number were correct, it would basically mean that the situation on our planet would go to hell twice as fast as we expected.

OK, you might say, but that was 5 years ago, so why haven’t we ever heard of this?

What happened is that climate scientists decided there must be something wrong with the models that gave the higher climate sensitivity. They thought the new predictions SHOULD agree with the old ones. In the literature this was dubbed the “hot models” problem, and climate scientists argued that these hot models are unrealistic because such a high climate sensitivity isn’t compatible with historical data.

This historical data covers many different periods and reaches back to a few million years ago, when we still used dial-up modems. It’s called “paleoclimate data”. Of course we don’t have temperature readings from back then, but there’s lots of indirect climate data in old samples, from rocks, ice, fossils and so on. In 2020, a massive study compiled all this paleoclimate data and found that it fits with a climate sensitivity between 2.6 and 3.9 degrees Celsius. This implies that the “hot” models, the ones with the high climate sensitivity, are not compatible with the historical data.

As a consequence, the newer IPCC reports weight climate models by how well they fit the historical data. The models with high climate sensitivity therefore contribute less to the uncertainty range, which is why that range has barely changed.
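The weighting idea can be sketched as follows. This is a hypothetical illustration, not the IPCC’s actual procedure: each model gets a weight that decays as a Gaussian in its mismatch with historical data (similar in spirit to published performance-weighting schemes), and every ECS value and error score in the ensemble is invented purely for illustration. The names `Model`, `performance_weights`, `weighted_mean_ecs` and `weighted_spread`, and the tuning parameter `sigma`, are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    ecs_c: float       # the model's climate sensitivity, degrees C
    hist_error: float  # mismatch with historical data (assumed score, arbitrary units)


def performance_weights(models: list[Model], sigma: float) -> list[float]:
    """Gaussian performance weights that sum to 1.

    A model whose historical error is large compared with `sigma` gets a
    weight near zero. The choice of `sigma` is itself an assumption.
    """
    raw = [math.exp(-((m.hist_error / sigma) ** 2)) for m in models]
    total = sum(raw)
    if total == 0.0:
        raise ValueError("all weights underflowed; increase sigma")
    return [w / total for w in raw]


def weighted_mean_ecs(models: list[Model], sigma: float) -> float:
    weights = performance_weights(models, sigma)
    return sum(w * m.ecs_c for w, m in zip(weights, models))


def weighted_spread(models: list[Model], sigma: float) -> float:
    """Weighted standard deviation of ECS, a crude stand-in for the uncertainty range."""
    weights = performance_weights(models, sigma)
    mean = sum(w * m.ecs_c for w, m in zip(weights, models))
    return math.sqrt(sum(w * (m.ecs_c - mean) ** 2 for w, m in zip(weights, models)))


if __name__ == "__main__":
    # Made-up ensemble: the two "hot" models are given larger historical errors.
    ensemble = [
        Model("A", 2.5, 0.5),
        Model("B", 3.0, 0.4),
        Model("C", 3.8, 0.6),
        Model("hot1", 5.3, 1.5),
        Model("hot2", 5.6, 1.8),
    ]
    # A very large sigma makes all weights (nearly) equal, i.e. no weighting.
    for label, sigma in (("unweighted", 1e6), ("weighted", 1.0)):
        print(f"{label:>10}: mean ECS {weighted_mean_ecs(ensemble, sigma):.2f} C, "
              f"spread {weighted_spread(ensemble, sigma):.2f} C")
```

With these invented numbers, both the weighted mean and the weighted spread come out smaller than the unweighted ones, because the two high-ECS models barely count. The result hinges entirely on the historical-error scores being a fair test of each model.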

That sounded reasonable to me at first, because if a model doesn’t match past records, there’s something wrong with it. Makes sense. Then I learned the following.

The major difference between these hot models and the rest of the pack is how they describe the physical processes going on in clouds. A particular headache with clouds is the supercooled phase of water, when water is below the freezing point but remains liquid. The issue is that a cloud’s reflectivity depends on whether its water is liquid or frozen, and supercooling makes it very complicated to work out exactly what influence the clouds have.

But how much data do we have about how clouds behaved a million years ago? As you certainly know, the dinosaurs forgot to back up their satellite images, so unfortunately all that million-year-old cloud data got lost and we don’t have any. To use the ARGUMENT from historical data, therefore, climate scientists must ASSUME that a model that is good for clouds in the CURRENT CLIMATE was also good for clouds back then, under possibly very different circumstances, without any direct data to check. That seems to me a very big “if”, given that getting the clouds right is exactly the problem with those models. Wouldn’t it be much better to check how well those models work with clouds for which we do have observations?

Like, you know, the ones we see in the sky? In principle, yes; in practice, it’s difficult. That’s because most climate models aren’t any good as weather models. While the physics is the same, they’re designed to run on completely different time scales. A weather forecast covers two weeks at most, but climate models you want to run a hundred years into the future.

There is one exception to this: one of the “hot” climate models can also be used as a weather model with only slight adaptations. It’s the one from the UK Met Office. So a small group from the UK Met Office used this “hot” model to make a 6-hour weather forecast. They compared it with a forecast from an older version of the same model that didn’t have the changes in the cloud physics and was somewhat “colder”. They found that the newer, “hotter” model gave the better forecast. And just so we’re on the same page, when I say the forecast was better, I don’t mean it was all sunny; I mean it agreed better with what actually happened. And the model with the better predictions had a climate sensitivity of more than 5 degrees Celsius.

I know this all sounds rather academic, so let me try to put it into context. The climate sensitivity determines how fast some regions of our planet will become uninhabitable if we continue pumping carbon dioxide into the atmosphere. The regions to be affected first and most severely are those around the equator, in central Africa, India and South America. These are some of the most densely populated regions of the world. The lives of the people who live there depend on scientists getting this number right.

So we need this number to get a realistic idea of how fast we need to act. That the climate sensitivity might be considerably higher than most current policies assume is a big problem. Why wasn’t this front-page news?

Well, quite possibly because no one read the paper. I didn’t either. As of now, it’s been cited a total of 13 times. I only know about it because my friend and colleague Tim Palmer wrote a comment for Nature magazine in which he drew attention to this result.

– Sorry, Tim, and in all honesty I had pretty much forgotten about this. But then late last year, a new paper with Jim Hansen as lead author appeared, which reminded me of it.

The new Hansen et al. paper is a re-analysis of the historical climate data. In a nutshell, they claim that the historical data is compatible with a climate sensitivity of 4.8 plus or minus 1.2 degrees Celsius (that is, 3.6 to 6.0 °C). That agrees with the “hot models”. And if it were right, it would invalidate the one reason climate scientists had to dismiss the models with the higher climate sensitivity.

I don’t want to withhold from you that some climate scientists have criticised the new Hansen et al. paper. They have called it a (quote) “worst-case scenario” that is “quite subjective and not justified by observations, model studies or literature.” It isn’t irrelevant, though, to note that the person who said this is one of the authors of the earlier paper that claimed the climate sensitivity from historical data is lower. I don’t know who’s right or wrong.

But for me the bottom line is that the possibility of a climate sensitivity above 5 degrees Celsius can’t easily be dismissed, especially given how fast average temperatures have been rising in recent years. And that’s really bad news.

Because if the climate sensitivity is indeed that high, then we have maybe 20 years or so until our economies collapse, and what’s the point of being successful on YouTube if my pension savings evaporate before I even retire?

That takes us to 13 minutes of the 21-minute video. Feel free to watch or read the rest of it yourself, or don’t.

A well-known, popular and long-proven effective science communicator, Sabine Hossenfelder has a PhD in physics.

She is author of the books “Lost in Math: How Beauty Leads Physics Astray” (Basic Books, 2018) and “Existential Physics: A Scientist’s Guide to Life’s Biggest Questions” (Viking, 2022).

As simple as possible, but not any simpler! Science and technology updates and summaries.

No hype, no spin, no tip-toeing around inconvenient truths.